This is the iseAuto dataset, created specifically for this work. It consists of camera images and LiDAR projections, recorded by the iseAuto shuttle's primary camera (FLIR Grasshopper3) and LiDAR (Velodyne VLP-32) sensors, respectively.

We provide two versions of the dataset. Version 2 refines some of the manually labeled annotations in the daytime subsets. The left and right images below show the same frame from v1 and v2, respectively.

The refinement focuses on the consistency of object labeling. The manual labeling was done by several annotators, who applied different standards to some small objects. Such inconsistency should be avoided in a manually labeled dataset intended for model training. Please note that all test results in the paper are based on v1 of the iseAuto dataset.

We also provide all raw bag files. The iseAuto shuttle runs on ROS, so all sensor data was collected as ROS messages and stored in bag files. You can extract the camera and LiDAR data from the bag files and then process them to fit the input requirements of your own models.
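A minimal sketch of pulling messages out of one bag file with the ROS1 `rosbag` Python API (this part only runs inside a ROS1 environment). Apart from ‘/front_camera/camera_info’, the topic names in the bag files are not listed here, so check them with `rosbag info <file>.bag` first. The helper name `collect_messages` and its `open_bag` parameter are hypothetical; `open_bag` only exists so the logic can be exercised without ROS installed.

```python
from collections import defaultdict


def collect_messages(bag_path, topics, open_bag=None):
    """Return {topic: [(timestamp, msg), ...]} for the requested topics of one bag.

    open_bag: callable that opens a bag file (hypothetical hook, defaults to rosbag.Bag).
    """
    if open_bag is None:
        import rosbag  # ROS1 Python API; available only inside a ROS1 environment
        open_bag = rosbag.Bag
    out = defaultdict(list)
    with open_bag(bag_path) as bag:
        # read_messages yields (topic, message, timestamp) tuples
        for topic, msg, t in bag.read_messages(topics=topics):
            out[topic].append((t, msg))
    return dict(out)


# Example inside ROS1 (the bag filename is hypothetical):
# info = collect_messages("day_fair_01.bag", ["/front_camera/camera_info"])
```

The returned image and point-cloud messages still need decoding (for example with `cv_bridge` and `sensor_msgs.point_cloud2`), which depends on the message types actually stored in the bags.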

As described in the paper, the iseAuto dataset is partitioned into four subsets: day-fair, day-rain, night-fair, and night-rain. Each subset contains 2000 frames, of which 600 have manually labeled annotations of the vehicle and human classes. The remaining 1400 frames have machine-generated annotations created by the Waymo-to-iseAuto transfer-learning fusion model.

Camera images were stored as PNG files at the original resolution of 4240x2824. LiDAR projections were stored as PKL files, produced by projecting the LiDAR points onto the camera plane with perspective projection. The points that fall within the camera's field of view were selected, and their corresponding 3D and camera coordinates were saved in pickle files. Our paper describes this step in detail.
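The general technique can be sketched with NumPy: transform the points into the camera frame, apply the intrinsic matrix, divide by depth, and keep the points that land inside the image. This is an illustrative sketch, not the exact procedure from the paper, and the layout of the PKL files is not reproduced here. It assumes `T_cam_lidar` maps LiDAR coordinates into the camera frame (check the direction of your extrinsic matrix and invert it if it is the reverse) and that `K` is the 3x3 intrinsic matrix; the function name is hypothetical.

```python
import numpy as np

IMAGE_W, IMAGE_H = 4240, 2824  # original camera resolution


def project_lidar_to_image(points_lidar, T_cam_lidar, K, width=IMAGE_W, height=IMAGE_H):
    """Perspective-project LiDAR points; return (xyz, uv) of points inside the image.

    points_lidar: (N, 3) XYZ in the LiDAR frame.
    T_cam_lidar:  (4, 4) transform from LiDAR to camera coordinates (assumed direction).
    K:            (3, 3) camera intrinsic matrix.
    """
    pts = np.asarray(points_lidar, dtype=np.float64).reshape(-1, 3)
    homo = np.hstack([pts, np.ones((pts.shape[0], 1))])     # (N, 4) homogeneous coords
    pts_cam = (np.asarray(T_cam_lidar) @ homo.T).T[:, :3]   # (N, 3) in camera frame
    in_front = pts_cam[:, 2] > 0                            # only z > 0 can be imaged

    uv = np.full((pts.shape[0], 2), -1.0)
    proj = (np.asarray(K) @ pts_cam[in_front].T).T          # (M, 3)
    uv[in_front] = proj[:, :2] / proj[:, 2:3]               # perspective division

    inside = (in_front
              & (uv[:, 0] >= 0) & (uv[:, 0] < width)
              & (uv[:, 1] >= 0) & (uv[:, 1] < height))
    return pts[inside], uv[inside]
```

Returning both the 3D points and their pixel coordinates mirrors the description above, where both sets of coordinates are kept for the points in the field of view.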

The vehicle and human annotations were made based on the camera images. Human annotators selected and masked the contours of vehicles in green (r,g,b = 0,255,0) and humans in red (r,g,b = 255,0,0). The dataset also contains processed grey-scale annotation images, in which vehicle (green) pixels have the value 1, human (red) pixels have the value 2, and all other pixels have the value 0. These grey-scale images are used only for metric calculation in our testing procedure.
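The RGB-to-grey mapping described above can be sketched in a few lines of NumPy. It assumes the annotation is loaded as an RGB array (Pillow or imageio return RGB; OpenCV's `cv2.imread` returns BGR, so convert with `cv2.cvtColor(img, cv2.COLOR_BGR2RGB)` first). The function name is hypothetical.

```python
import numpy as np

VEHICLE_RGB = (0, 255, 0)  # green
HUMAN_RGB = (255, 0, 0)    # red


def rgb_annotation_to_grey(rgb):
    """Map an (H, W, 3) RGB annotation to an (H, W) uint8 label image:
    vehicle (green) -> 1, human (red) -> 2, all other pixels -> 0."""
    rgb = np.asarray(rgb)
    grey = np.zeros(rgb.shape[:2], dtype=np.uint8)
    grey[np.all(rgb == VEHICLE_RGB, axis=-1)] = 1
    grey[np.all(rgb == HUMAN_RGB, axis=-1)] = 2
    return grey
```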

Annotation-rgb and Annotation-grey images were all saved as PNG files.
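The grey-scale annotations are used for metric calculation, and the paper defines the exact metrics. As an assumed illustration only, per-class intersection over union (IoU), a common segmentation metric, could be computed from a predicted and a ground-truth grey image as follows; the function name is hypothetical.

```python
import numpy as np


def per_class_iou(pred, gt, classes=(1, 2)):
    """IoU per class (1 = vehicle, 2 = human) for two grey-scale label images.
    Returns NaN for a class that appears in neither image."""
    pred = np.asarray(pred)
    gt = np.asarray(gt)
    ious = {}
    for c in classes:
        p, g = pred == c, gt == c
        union = np.logical_or(p, g).sum()
        inter = np.logical_and(p, g).sum()
        ious[c] = float(inter) / float(union) if union > 0 else float("nan")
    return ious
```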

For nighttime data, we provide LiDAR-camera projection images for better visualisation: the LiDAR points were upsampled and projected into the camera images. Human annotators used these images to identify objects that were hard to see clearly in the camera images because of poor illumination.

Please note that the LiDAR, camera, annotation, and LiDAR-camera projection files of the same frame follow the same naming convention (‘sequence number’_‘frame number’) and differ only in their extensions.
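Because the files of one frame share a stem, they can be matched across folders by that stem. A minimal sketch with `pathlib`; the folder names in the test are hypothetical, and `parse_stem` assumes both parts of the stem are plain integers.

```python
from collections import defaultdict
from pathlib import Path


def group_by_frame(paths):
    """Group files by their shared stem '<sequence>_<frame>' (extension ignored)."""
    groups = defaultdict(list)
    for p in map(Path, paths):
        groups[p.stem].append(p)
    return dict(groups)


def parse_stem(stem):
    """Split '<sequence>_<frame>' into (sequence, frame) integers."""
    seq, frame = stem.split("_", 1)
    return int(seq), int(frame)
```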

The ‘splits’ folder contains text files listing the paths of the data used for training, validation, and testing. We emphasise again that these splits are specific to the experiments in our paper. The detailed description of the iseAuto dataset split can be found in the paper.
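A minimal sketch of loading a split file, assuming one data path per line; the file names inside ‘splits’ and whether the listed paths are relative are assumptions. The optional `root` check (a hypothetical parameter) reports listed files that are missing locally.

```python
from pathlib import Path


def read_split(split_file, root=None):
    """Return (entries, missing): the listed paths and, if `root` is given,
    the entries that do not exist under it."""
    entries = [ln.strip() for ln in Path(split_file).read_text().splitlines() if ln.strip()]
    missing = [] if root is None else [e for e in entries if not (Path(root) / e).exists()]
    return entries, missing
```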

The ‘norm_data’ folder contains the information we used to normalise the Waymo and iseAuto LiDAR data. As analysed in our paper, this normalisation is critical for our models to produce reliable output.
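The format of the files in ‘norm_data’ is not described here. Purely as an assumption, if they hold per-channel mean and standard deviation, normalisation would be a per-channel z-score as sketched below; if they hold minimum and maximum values instead, use (x - min) / (max - min). The function name is hypothetical.

```python
import numpy as np


def normalise_lidar(features, mean, std, eps=1e-8):
    """Per-channel z-score: (x - mean) / std.

    features: (N, C) array, e.g. X, Y, Z per LiDAR point.
    mean, std: length-C statistics (assumed content of 'norm_data').
    eps: small constant that avoids division by zero for constant channels.
    """
    x = np.asarray(features, dtype=np.float64)
    return (x - np.asarray(mean, dtype=np.float64)) / (np.asarray(std, dtype=np.float64) + eps)
```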

The bag files of each subset are sorted by their creation order, which corresponds to the sequence numbers of the PNG and PKL files.
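A minimal sketch of recovering that ordering in code. It assumes the bag names carry the default `rosbag record` timestamp (`YYYY-MM-DD-HH-MM-SS`, optionally after a prefix), so that sorting by the stamp gives creation order. The actual bag names, and whether sequence numbers start at 0 or 1, are not stated here; hence the hypothetical `start` parameter.

```python
import re
from pathlib import Path

# Timestamp pattern of default `rosbag record` file names (assumed to apply here).
STAMP = re.compile(r"(\d{4}-\d{2}-\d{2}-\d{2}-\d{2}-\d{2})")


def order_bags(bag_paths, start=1):
    """Sort bags by the timestamp in their names and map sequence number -> path."""
    def key(p):
        m = STAMP.search(Path(p).name)
        return m.group(1) if m else Path(p).name  # fall back to the plain name
    ordered = sorted(bag_paths, key=key)
    return {seq: p for seq, p in enumerate(ordered, start=start)}
```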

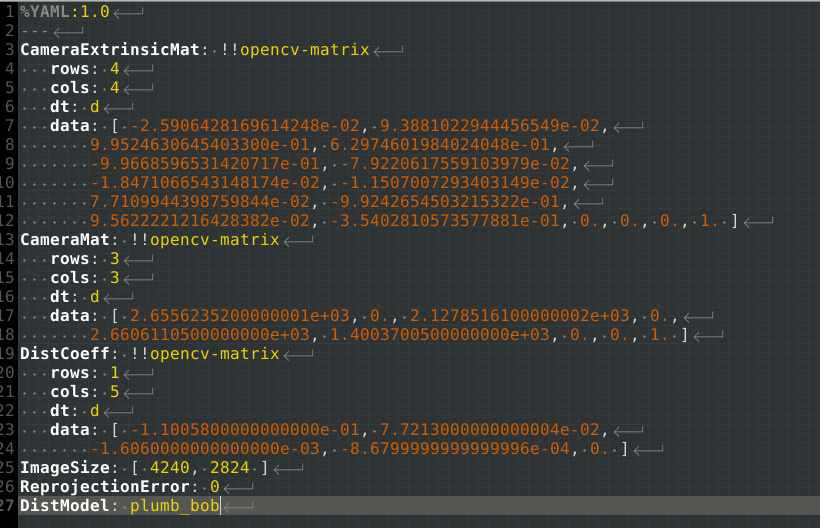

The camera-LiDAR calibration of the iseAuto shuttle was done with the Autoware Camera-LiDAR Calibration Package.

We performed the calibration several times to make the result as accurate as possible.

Here is the YAML file created by Autoware that contains the camera-LiDAR calibration information.

Camera intrinsic matrices can be found in the ROS topic ‘/front_camera/camera_info’, which is available in all bag files.
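A minimal sketch of turning a `sensor_msgs/CameraInfo` message from ‘/front_camera/camera_info’ into NumPy arrays. It assumes ROS1 field names (upper-case `K` and `D`; ROS2 uses lower-case `k` and `d`). `K` is stored as nine values in row-major order. The function name is hypothetical.

```python
import numpy as np


def intrinsics_from_camera_info(msg):
    """Return (K, D, (width, height)) from a ROS1 sensor_msgs/CameraInfo message.

    msg.K: 9 floats, row-major 3x3 intrinsic matrix.
    msg.D: distortion coefficients (length depends on the distortion model).
    """
    K = np.array(msg.K, dtype=np.float64).reshape(3, 3)
    D = np.array(msg.D, dtype=np.float64)
    return K, D, (msg.width, msg.height)
```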

There are md5sum files containing the checksums of all large files.

Once you have downloaded a file, you can check its integrity with: `md5sum -c FILENAME.md5`

Make sure the checksum file and the downloaded file stay in the same directory.
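Where `md5sum` is not available (e.g. on Windows), the same check can be sketched with Python's `hashlib`. It assumes the standard `md5sum` line format `<digest>  <filename>`, where a `*` before the name marks binary mode. Unlike `md5sum -c`, which resolves names relative to the current directory, this hypothetical helper resolves them relative to the .md5 file's folder, which matches keeping both files together.

```python
import hashlib
from pathlib import Path


def md5_of(path, chunk_size=1 << 20):
    """MD5 hex digest of a file, read in chunks so large files fit in memory."""
    h = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def check_md5_file(md5_path):
    """Return {filename: True/False} for every entry of an md5sum-style file."""
    md5_path = Path(md5_path)
    results = {}
    for line in md5_path.read_text().splitlines():
        if not line.strip():
            continue
        digest, name = line.split(None, 1)
        name = name.lstrip("*")  # '*' marks binary mode in md5sum output
        target = md5_path.parent / name
        results[name] = target.exists() and md5_of(target) == digest.lower()
    return results
```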
